AI is full of big ideas. Every day, people build models that predict stock prices, detect fraud, and translate alien languages. Yet most of these models never leave the lab: they stall in the development phase.

They sit deep in a maze of notebook code while their developers debug and search Stack Overflow. They live only inside those notebooks, never exposed to real users. They never make an impact.

Why? Building a model is a very different skill from deploying it into production. MLOps (Machine Learning Operations) is the discipline that closes this gap.

Think of MLOps as a demanding boxing coach that turns rookie AI models into production-ready fighters. It is the bridge that carries ideas into real-world applications.

Why Most AI Models Fail

You’ve built an AI model. It’s brilliant: it forecasts stock market moves with frightening accuracy. You show it to your team. They’re impressed.

Then a teammate asks: “How do we use this in the real world?”

That’s when the dream starts to dissolve.

This is the stage where most AI projects fail. The model performs beautifully in the development environment, but once you put it into production, things start to break:

• It doesn’t scale.

• It crashes under real-world data.

• It takes forever to respond.

• It can only handle one user at a time.

Once development wraps up, the once-promising AI product is abandoned in a forgotten Jupyter notebook, never reaching real users.

That’s why MLOps exists. MLOps practices take experimental models out of the lab and deploy them reliably into real-world operations. By turning theory into action, MLOps helps ensure that a model’s test performance holds up under live, high-stakes conditions.

The rest of this tutorial shows how to use FastAPI and Kubernetes to turn your model into a production-grade AI system.

Step 1: Building the Model

Before we can deploy anything, we need something worth deploying.

We’ll build an AI model that detects financial fraud. It takes transaction data as input and classifies each transaction as genuine or fraudulent.

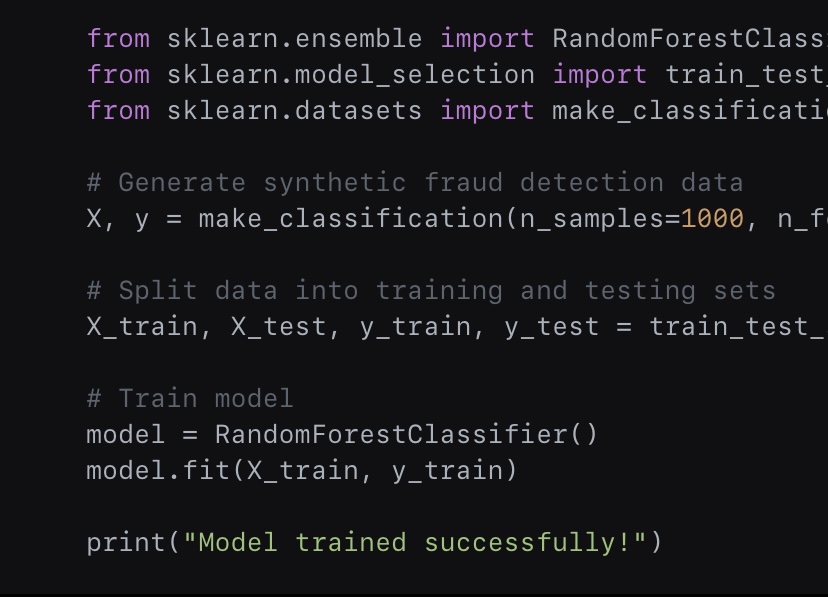

Here’s a quick example using Python and Scikit-learn:
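A minimal sketch of such a model, under a few assumptions: synthetic, imbalanced data from scikit-learn’s `make_classification` stands in for real transaction records (roughly 5% labelled as fraud), a `RandomForestClassifier` is the chosen classifier, and `fraud_model.joblib` is a hypothetical file name for the saved model.

```python
# Minimal fraud-detection model (sketch).
# Assumption: synthetic data replaces real transaction features.
import joblib
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import classification_report
from sklearn.model_selection import train_test_split

# Synthetic, imbalanced dataset: about 5% of rows belong to class 1 (fraud).
X, y = make_classification(
    n_samples=5000,
    n_features=4,
    n_informative=3,
    n_redundant=1,
    weights=[0.95, 0.05],
    random_state=42,
)

# Stratify so the test set keeps the same fraud ratio as the full data.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, stratify=y, random_state=42
)

# class_weight="balanced" gives the rare fraud class more weight in training.
model = RandomForestClassifier(
    n_estimators=100, class_weight="balanced", random_state=42
)
model.fit(X_train, y_train)

# Per-class precision and recall matter more than accuracy for fraud.
print(classification_report(y_test, model.predict(X_test)))

# Save the trained model so a separate API process can load it later.
joblib.dump(model, "fraud_model.joblib")
```

Balancing class weights is one common way to keep a model from simply ignoring the rare fraud class; with real data, precision and recall on that class are the numbers to watch, since a model that never flags fraud would still score about 95% accuracy here.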

Great! The model can detect fraud. But right now it is just a Python object: it can’t communicate with external systems or accept requests from end users.

That’s where FastAPI comes in.

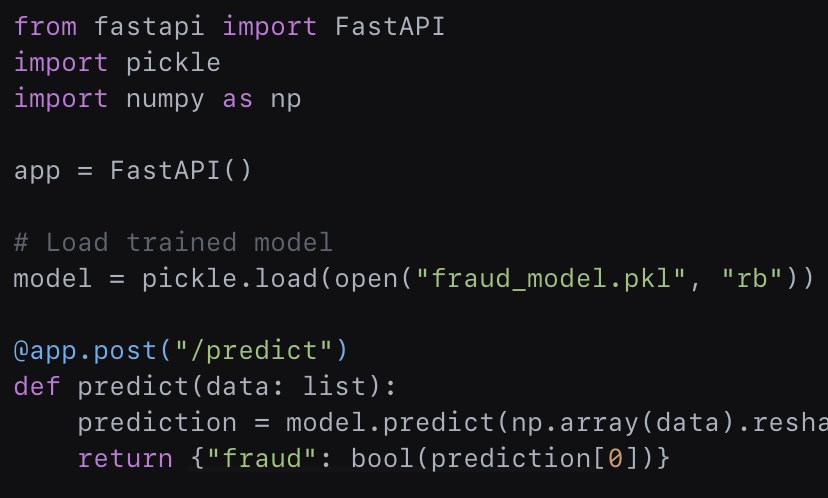

Step 2: Wrapping the Model with FastAPI

In step two, we use FastAPI to turn our model into a working API.

FastAPI gives our fraud detection model a way to talk: a web API through which users can send it data and get answers back.

Through the API, anyone can submit transaction data and receive a fraud prediction in return.

There’s one fundamental problem, though: the API runs only on our local machine. Shut down the laptop, and the whole system goes with it.

Time to deploy it properly.

Step 3: Deploying with Kubernetes

Before our AI model can run without failures, it must be able to serve thousands of users. We need:

✔️ Scalability (more resources as the number of users grows).

✔️ Reliability (no downtime).

✔️ Automation (no manual restarts).

Kubernetes is built for exactly this, letting us run AI models at massive scale.

Here’s how we deploy our FastAPI app with Kubernetes:

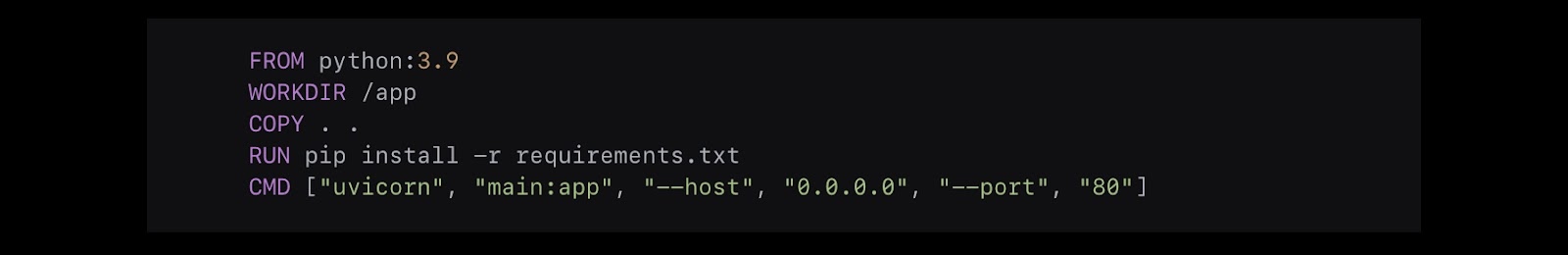

1. Create a Dockerfile

First, we package our app into a Docker container.

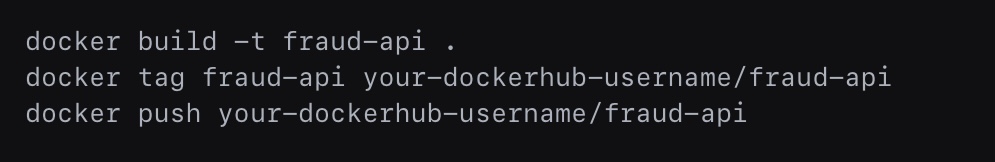

Now we build and push the Docker image to a registry:

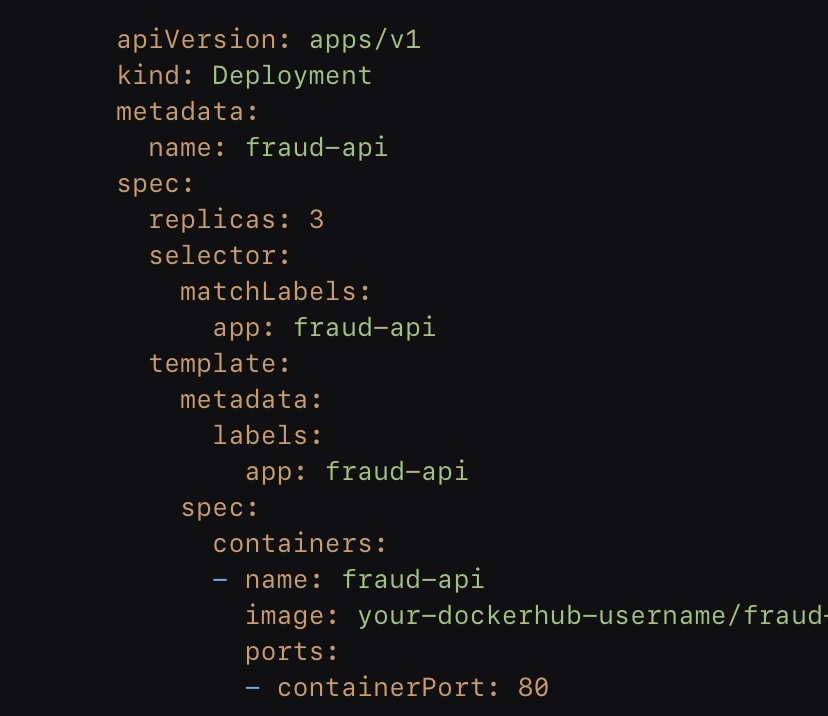

2. Create a Kubernetes Deployment

We now create a Kubernetes YAML file to deploy our app:
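A sketch of what such a manifest might contain, built as a Python dictionary and written out as JSON (kubectl accepts JSON manifests as well as YAML). The image reference, the `fraud-api` name and labels, the replica count, and the resource figures are all hypothetical placeholders; port 8000 and the `/health` path are assumed to match the port and health endpoint the API listens on inside the container.

```python
# Build a Kubernetes Deployment manifest for the API (sketch).
# Assumptions: image, names, replica count and resource values are placeholders.
import json

APP_NAME = "fraud-api"
IMAGE = "registry.example.com/fraud-api:1.0"  # hypothetical image reference
PORT = 8000  # assumed container port of the API server


def build_deployment(replicas: int = 3) -> dict:
    # The same HTTP check is reused for liveness and readiness.
    probe = {
        "httpGet": {"path": "/health", "port": PORT},
        "initialDelaySeconds": 5,
        "periodSeconds": 10,
    }
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": APP_NAME, "labels": {"app": APP_NAME}},
        "spec": {
            # Several identical pods share the incoming traffic.
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": {"app": APP_NAME}},
            "template": {
                "metadata": {"labels": {"app": APP_NAME}},
                "spec": {
                    "containers": [{
                        "name": APP_NAME,
                        "image": IMAGE,
                        "ports": [{"containerPort": PORT}],
                        "resources": {
                            "requests": {"cpu": "250m", "memory": "256Mi"},
                            "limits": {"cpu": "500m", "memory": "512Mi"},
                        },
                        # Restart the container if /health stops responding.
                        "livenessProbe": probe,
                        # Route traffic only to pods that pass the check.
                        "readinessProbe": probe,
                    }],
                },
            },
        },
    }


manifest = build_deployment()
with open("deployment.json", "w") as f:
    json.dump(manifest, f, indent=2)
```

The resulting file can be applied with `kubectl apply -f deployment.json` and the pods inspected with `kubectl get pods -l app=fraud-api`. The probes are what give Kubernetes its "no manual restarts" behaviour: a container that fails its liveness check is restarted automatically.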

Then, deploy it to Kubernetes:

Boom. Our AI model now runs as multiple replicas across the cluster, ready to handle thousands of requests smoothly.
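A fixed replica count does not grow with demand. One way to have Kubernetes add or remove pods as load changes is a HorizontalPodAutoscaler. The sketch below assumes a Deployment named `fraud-api` (a hypothetical name) whose pods declare CPU requests, and a cluster that runs a metrics source such as metrics-server; the replica bounds and the 70% CPU target are placeholder values.

```python
# Sketch: a HorizontalPodAutoscaler (autoscaling/v2) for the fraud API.
# Assumptions: the target Deployment exists, its pods set CPU requests,
# and the cluster provides resource metrics (e.g. metrics-server).
import json


def build_hpa(deployment: str = "fraud-api", min_replicas: int = 2,
              max_replicas: int = 10, target_cpu_percent: int = 70) -> dict:
    if not 1 <= min_replicas <= max_replicas:
        raise ValueError("need 1 <= min_replicas <= max_replicas")
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": deployment},
        "spec": {
            # The workload whose replica count is adjusted.
            "scaleTargetRef": {
                "apiVersion": "apps/v1",
                "kind": "Deployment",
                "name": deployment,
            },
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            # Scale so average CPU use across pods stays near the target,
            # measured as a percentage of each pod's CPU request.
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {
                        "type": "Utilization",
                        "averageUtilization": target_cpu_percent,
                    },
                },
            }],
        },
    }


hpa = build_hpa()
with open("hpa.json", "w") as f:
    json.dump(hpa, f, indent=2)
```

Applied with `kubectl apply -f hpa.json`, its current state can be checked with `kubectl get hpa`. CPU is only a proxy for load; a model that is memory-bound or I/O-bound may need a different metric.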

Final Thoughts: Why This Matters

Building an AI model is easy. The real challenge is deploying it properly.

MLOps helps you build models that succeed in real-world applications.

FastAPI enables your models to speak.

Kubernetes makes them invincible.

Most AI projects never launch because they never get past the development stage. What you learned today shows you how to take a model from initial concept all the way to production deployment.

So, will you let your AI model slowly die in a Jupyter notebook, or will you deploy it like a pro?

The ball is in your court.